LangGraph in Action: The Framework Powering Multi-Agent AI Systems

The realm of artificial intelligence is rapidly evolving, moving beyond simple conversational agents to sophisticated agentic AI systems capable of complex reasoning, planning, and multi-step execution. Traditional large language model (LLM) applications often face inherent limitations: they lack persistent memory, struggle with intricate orchestration, and can falter when confronted with non-linear decision-making. These stateless and often brittle systems pose significant challenges for developers striving to build production-grade AI applications that demand context retention, adaptive workflows, and robust reliability.

LangGraph emerges as a pivotal solution, offering a graph-based orchestration framework specifically engineered for constructing resilient, stateful AI agents that thrive on real-world complexity. Developed by the creators of LangChain, LangGraph redefines how developers conceptualize and implement AI workflows, providing a modular and visually intuitive approach to agent architecture that scales from straightforward chatbots to advanced multi-agent cognitive systems.

Core Principles: Understanding LangGraph's Foundation

What is LangGraph?

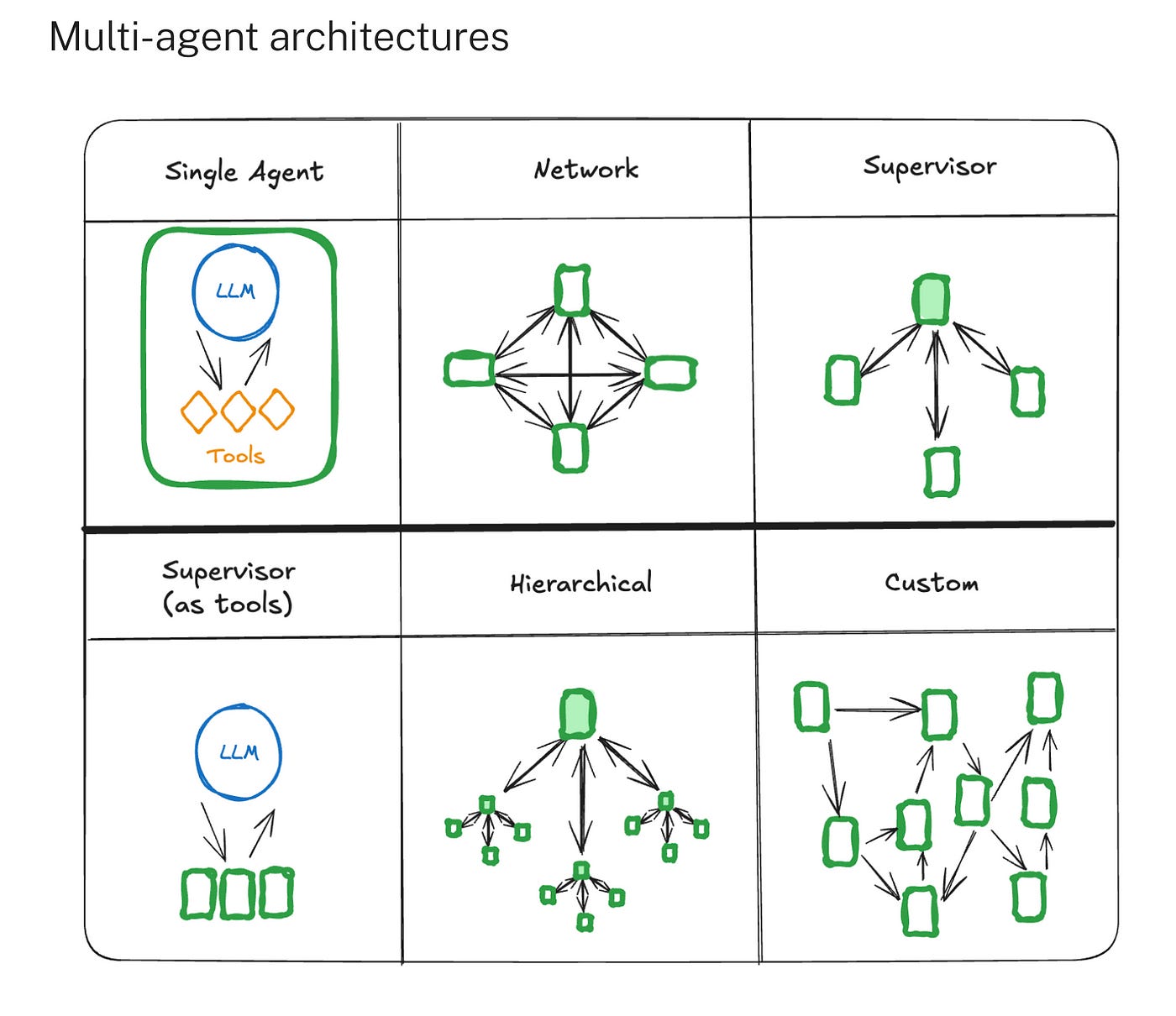

Fundamentally, LangGraph is a low-level orchestration framework with a graph-based architecture that lets developers build, manage, and deploy long-running, stateful agents. Unlike traditional sequential chains, LangGraph represents workflows as directed graphs: individual nodes act as modular processing units (functions, tools, LLM calls, prompts), and edges define the transitions between them, triggered by decisions or state changes.

This paradigm represents AI workflows as interconnected nodes and executes them as a graph. Every node carries out a distinct function, whether that is an LLM interaction, a data transformation, or an integration with an external service. The approach gives clear visual organization and modularity for building reusable components, along with the flexibility to combine varied processing steps into coherent, dynamic workflows.

The Power of Stateful Execution

LangGraph's built-in state management is one of its most notable features. The framework uses a two-layer memory architecture that separates short-term execution state from long-term persistent memory. This includes:

Memory persistence that preserves conversational context across exchanges using thread-scoped checkpoints.

Error handling that supports system resilience through retry logic and structured recovery mechanisms.

Checkpointing that allows state recovery and conversation resumption, even during lengthy sessions.

Empowering Multi-Agent Collaboration

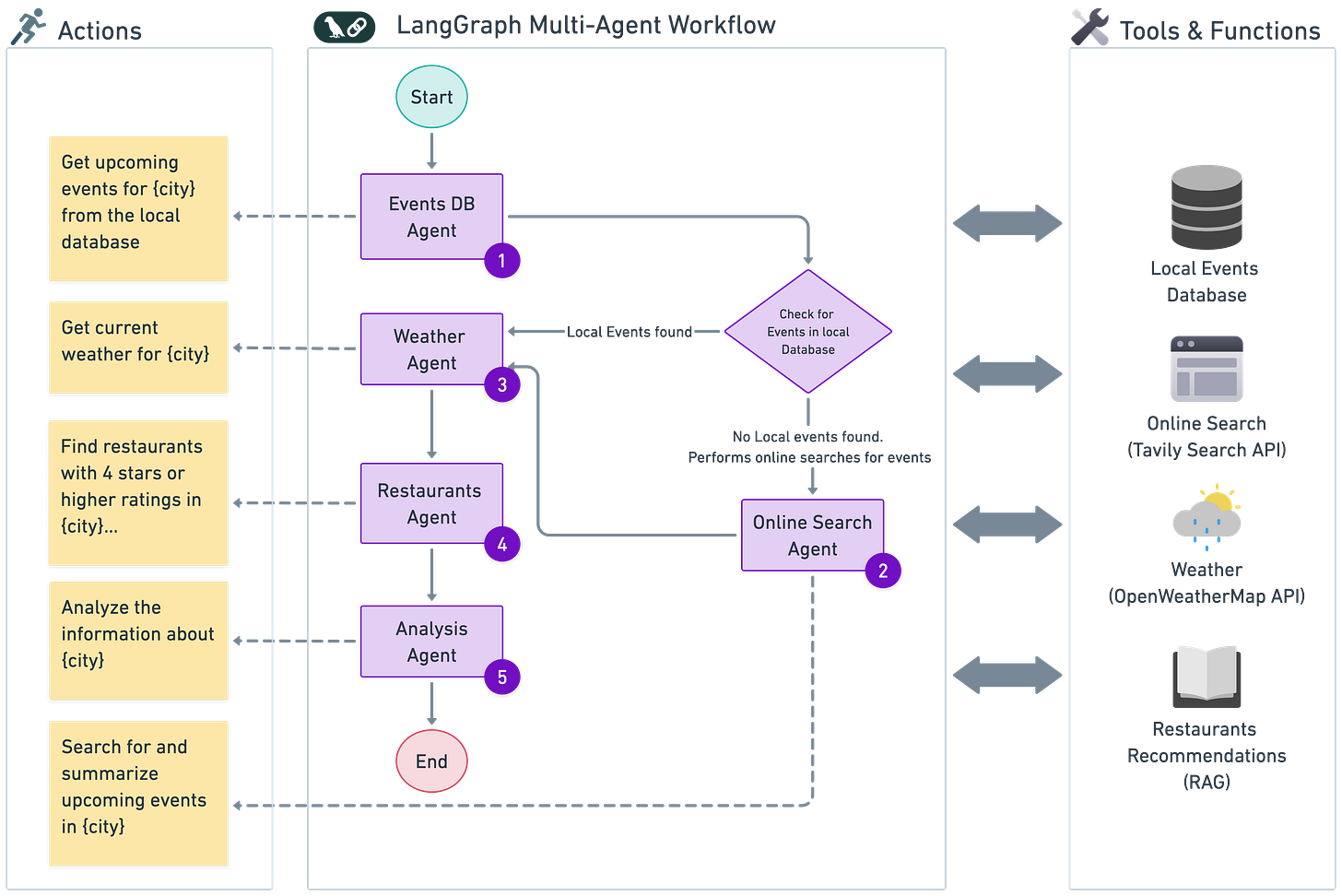

LangGraph supports multi-agent coordination, enabling specialized agents to collaborate, negotiate, and delegate tasks within a single framework. Several agents, each with a distinct role, can work together while exchanging information through clearly defined channels. This collaborative model enables task specialization, parallel processing of subtasks, and dynamic workflows that adapt to collective agent feedback.

Seamless Interoperability

The framework easily integrates with the larger LangChain ecosystem, which includes a variety of LLM providers, custom Python functions, bespoke tools, a wide range of APIs, and LangSmith for advanced observability and debugging. Because of this built-in compatibility, developers can take advantage of their current investments in AI infrastructure and use LangGraph's sophisticated orchestration features at the same time.

How LangGraph Functions: A Deep Dive

Architectural Components

The three main parts of LangGraph's architecture work together to create potent AI workflows:

Nodes: Nodes are the individual processing units that carry out particular tasks within the graph. A node typically receives the current state, performs its assigned task, and returns an updated state. Common node types include LLM calls for text generation, data transformation nodes for formatting and cleaning, and external integration nodes for interacting with APIs and services.

Edges: These node-to-node connections specify how the graph's execution proceeds. There are several edge types supported by LangGraph: conditional edges that call a function to determine the next node based on criteria, normal edges for direct transitions, and designated entry/exit points that specify the start and end states of the workflow.

State: The shared data that moves between nodes is represented by the state, which carefully preserves context while the workflow is being carried out. Conversational messages, variables, and any application-specific information that nodes need to access and change are all contained in the state object.

Illustrative Control Flow Examples

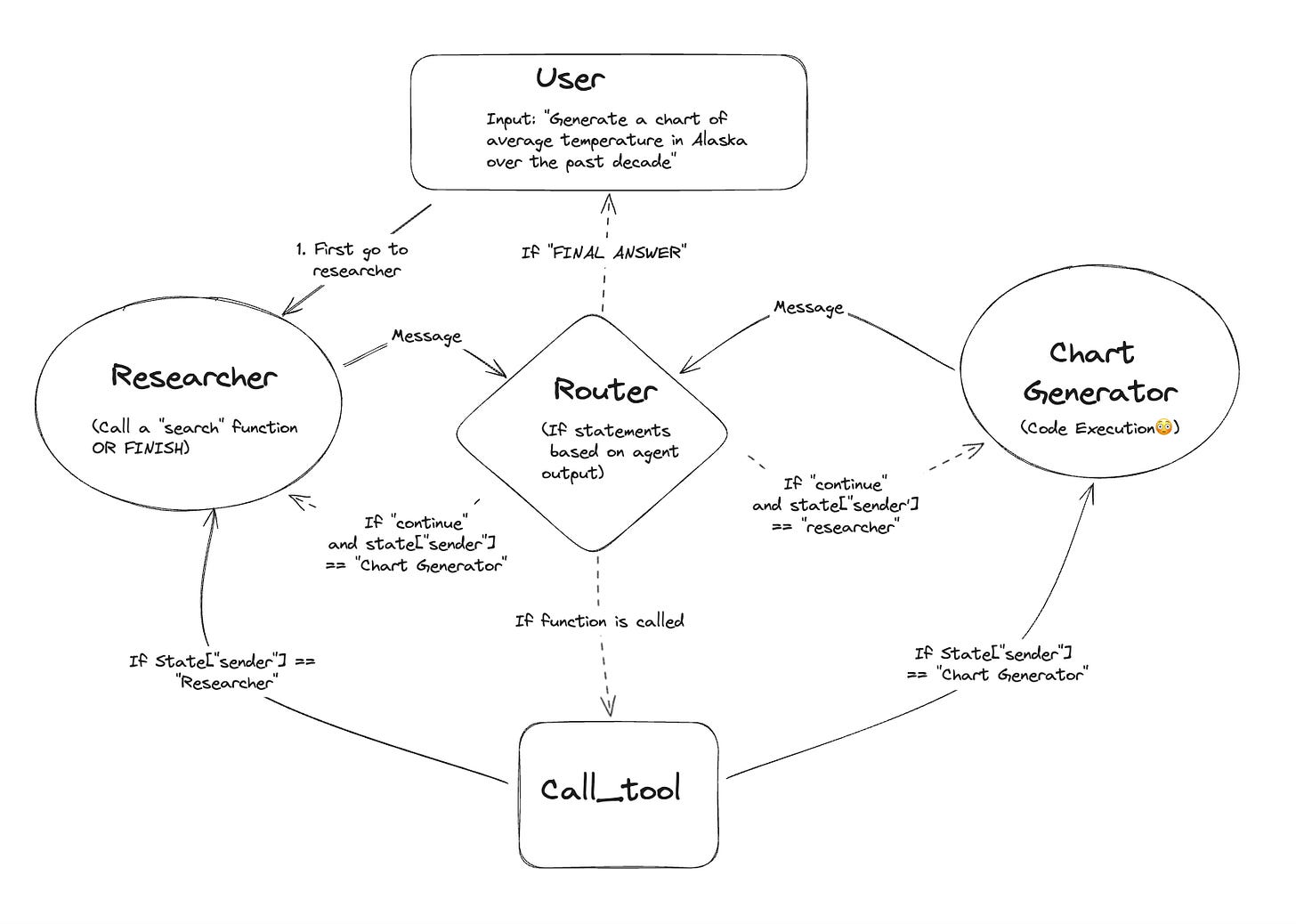

A vast range of intricate AI applications are made possible by LangGraph's control flow flexibility:

Simple Flow Example: A personal assistant workflow starts with a greeting node that collects the user's name, moves on to a processing node that stores this data, and ends with a humor node that delivers a customized joke.

Branching Flow Example: A travel planning assistant demonstrates conditional logic. After an initial node collects user preferences, a conditional router checks whether all required data is present. If it is not, the flow returns to the gathering stage; if it is, the flow proceeds to flight search, hotel search, and booking confirmation.

Multi-Agent Collaboration Example: In a content creation pipeline, a writer agent creates the initial content, an editor agent evaluates it and suggests improvements, a conditional router determines whether revisions are necessary, and a publisher agent formats and distributes the final product.

Technical Implementation Details

Program execution is defined by the framework using message passing, in which nodes exchange information by reading from and writing to a common state. A node passes its updated state to the next node in the execution path after finishing its processing. This sophisticated method makes it easier to create extremely intricate workflows with parallel execution paths, conditional branches, and loops.

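Python

    # Basic LangGraph structure
    from langgraph.graph import StateGraph, START, END
    from typing_extensions import TypedDict

    class State(TypedDict):
        messages: list
        user_data: dict

    def process_function(state: State) -> dict:
        # Placeholder node: returns only the state keys it updates
        return {"messages": state["messages"] + ["processed"]}

    graph_builder = StateGraph(State)
    graph_builder.add_node("process_input", process_function)
    # The graph needs an entry point before it can be compiled
    graph_builder.add_edge(START, "process_input")
    graph_builder.add_edge("process_input", END)
    app = graph_builder.compile()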

LangGraph's Architecture: Beyond the Basics

The Graph as Cognitive Architecture

LangGraph's graph-based cognitive architecture makes it possible to build complex reasoning patterns that resemble human decision-making. Within a unified architecture, the framework supports a variety of control flows, including single-agent, multi-agent, hierarchical, and sequential patterns. This adaptability lets developers represent intricate business logic as intuitive, visual workflows.

Nodes, state, commands, and events are the fundamental building blocks of the architecture. Nodes carry out particular tasks, state preserves important workflow context, commands control the flow of execution, and events set off reactions to outside stimuli.

The Robust LangGraph Runtime

Enterprise-level features offered by the LangGraph Runtime include robust error-handling procedures, persistent state management for lengthy workflows, and streaming output for real-time user feedback. In addition to offering a full suite of 30 API endpoints for creating highly customized user experiences, the runtime supports horizontal scaling to effectively handle long-running, bursty traffic.

Its streaming capabilities greatly improve system responsiveness and overall efficiency by enabling real-time data processing. This streaming functionality is especially helpful for intricate workflows where users need quick feedback while performing time-consuming tasks.

Advanced Error Recovery and Human-in-the-Loop Capabilities

Advanced error recovery features like automatic retries and graceful degradation are incorporated into LangGraph. Importantly, users can examine and even change agent state at any time during execution thanks to the framework's human-in-the-loop features, which facilitate smooth human oversight.

Developers can pause processing at predetermined points, save the current state, and resume when necessary thanks to the integrated checkpoint mechanism. Applications needing resumable execution, lengthy tasks, or processes requiring human intervention will greatly benefit from this feature.

Real-World Impact: LangGraph in Action

LangGraph is not merely a theoretical framework; it is actively shaping the future of AI in production environments across leading enterprises.

Enterprise Adoption Highlights

Klarna, serving 85 million active users, has strategically deployed LangGraph to power an AI-driven customer support assistant. This system effectively handles a workload equivalent to 700 full-time staff, managing over 2.5 million conversations spanning tasks from payment inquiries to refunds and escalations. LangGraph's controllable agent architecture significantly reduced latency, enhanced reliability, cut operational costs, and achieved an impressive 80% reduction in customer query resolution time.

Replit leverages LangGraph to enable real-time code generation through an AI copilot capable of building software from scratch. This multi-agent system incorporates human-in-the-loop functionalities, providing users transparent visibility into agent actions, from package installations to file creation.

Uber has significantly streamlined its development process by automating unit test generation using LangGraph, thereby reducing development time and improving code quality. The framework has markedly accelerated Uber's development cycle when scaling complex workflows.

AppFolio developed a property management copilot that demonstrably saves property managers over 10 hours per week. LangGraph's contribution helped them reduce application latency and double the accuracy of their decisions.

LinkedIn implemented a hierarchical agent system built on LangGraph to enhance candidate sourcing, matching, and messaging processes, allowing recruiters to dedicate more focus to strategic initiatives.

Diverse Industry Applications

Beyond these prominent enterprises, nearly 400 companies have successfully deployed agents into production using the LangGraph Platform since its beta launch. The framework is finding widespread application across diverse sectors for:

Intelligent shopping agents

Code-assist tools within integrated development environments (IDEs)

Multi-step authentication systems

Advanced message summarization

Autonomous scientific literature reviews

Complex multi-agent simulators

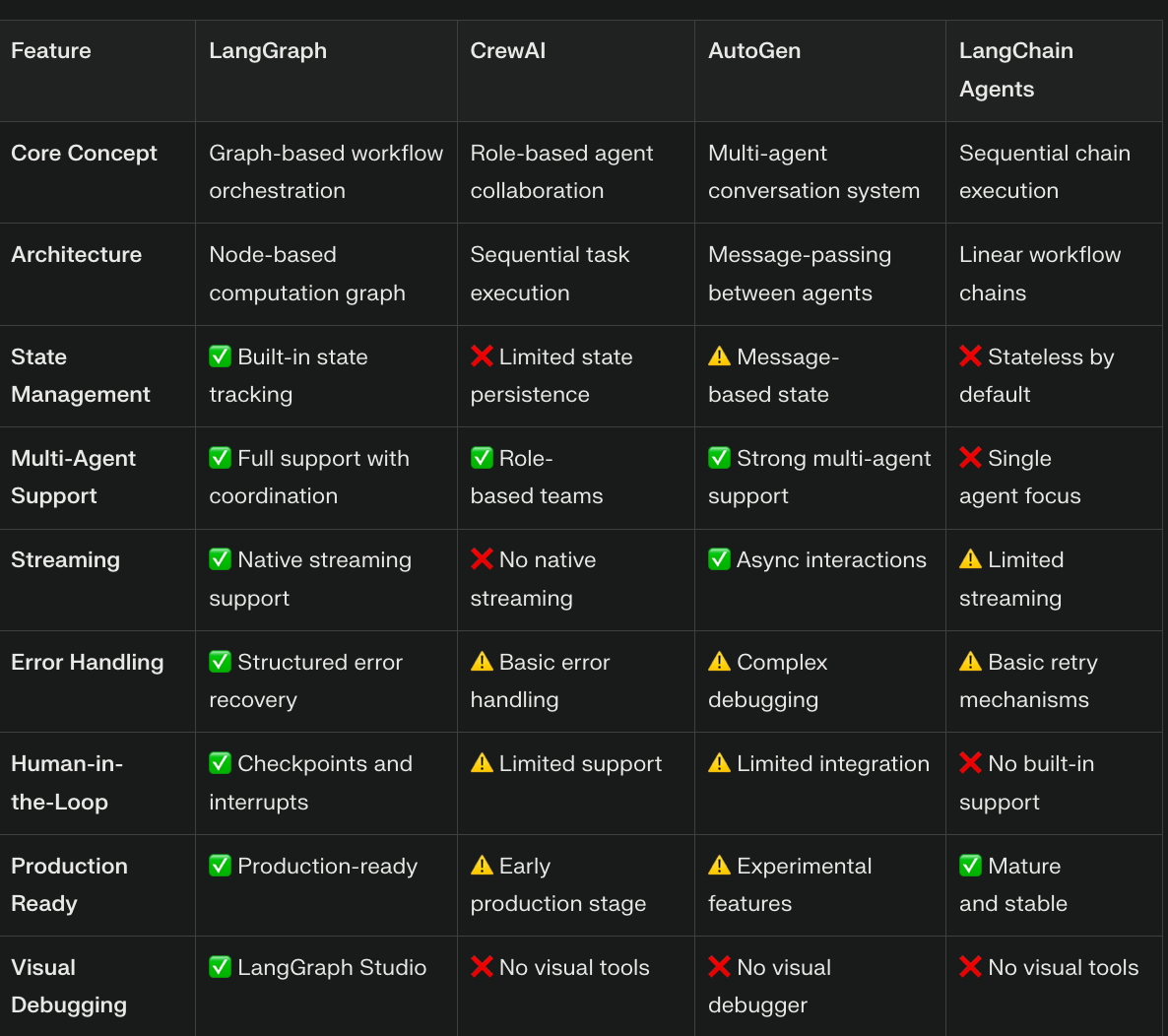

LangGraph vs. The Field: A Comparative Overview

Understanding LangGraph's positioning requires a look at its contemporaries, notably CrewAI and AutoGen.

LangGraph distinguishes itself through its superior state management, comprehensive debugging capabilities, and robust production-ready deployment features. While CrewAI excels in rapid prototyping and AutoGen provides strong conversational AI capabilities, LangGraph offers the most comprehensive and resilient solution for enterprise-scale, stateful AI applications. It's built for those who need fine-grained control and unparalleled reliability.

Benefits for Developers: Why Choose LangGraph?

For developers navigating the complexities of AI, LangGraph offers a suite of compelling advantages:

Scalable and Adaptable Architecture

LangGraph's modular design empowers developers to construct applications that scale effortlessly by simply adding more nodes to the network. The framework's architecture inherently supports horizontal scaling to manage traffic spikes and provides a robust infrastructure purpose-built for the unique challenges of stateful, long-running workflows inherent to advanced agents.

Unparalleled Debugging and Observability

The deep integration with LangSmith provides LLM-native observability, giving developers detailed insight into their application's behavior. Furthermore, LangGraph Studio offers visual debugging capabilities with real-time graph visualization, intuitive state inspection, and the ability to modify state mid-execution. This debugging environment includes execution history browsing, interactive panels for testing, and hot reloading for rapid development cycles.

Fostering Modular Code Design

LangGraph inherently promotes the creation of testable, reusable components through its node-based architecture. Each node can be independently developed, rigorously tested, and deployed, leading to more maintainable and reliable codebases. This modularity enables developers to cultivate libraries of common functionality that can be seamlessly shared across diverse agent implementations.
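Because a node is an ordinary function from state to a partial state update, it can be tested without building a graph at all. The sketch below uses a hypothetical `count_words` node and needs only the standard library.

```python
from typing import TypedDict


class ReviewState(TypedDict):
    text: str
    word_count: int


def count_words(state: ReviewState) -> dict:
    # A node is a plain function: read the state, return only the keys it changes
    return {"word_count": len(state["text"].split())}


def test_count_words() -> None:
    # The node can be exercised directly with a hand-built state
    update = count_words({"text": "graphs make agents modular", "word_count": 0})
    assert update == {"word_count": 4}


test_count_words()
```

The same function can later be registered with `add_node` in any graph whose state includes these keys, which is what makes a shared library of nodes practical.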

Production-Ready Deployment Capabilities

The framework meticulously addresses critical production challenges, including durable execution that persists through failures, comprehensive memory management with both short-term and long-term storage, and a production-ready deployment infrastructure. LangGraph Platform simplifies deployment with 1-click capabilities, effectively eliminating the traditional complexities associated with agent deployment.

The Future of AI Orchestration: LangGraph's Trajectory

Technological Roadmap Ahead

In order to further establish LangGraph as the leading agent orchestration framework, its future development will be firmly concentrated on a few important areas. As a specialized integrated development environment (IDE), LangGraph Studio will keep evolving by providing real-time debugging capabilities, improved collaborative development tools, and even richer visual graph representation. The platform will enhance its smooth integration with LangSmith for unmatched tracing and monitoring, and it will support hot reloading, guaranteeing quick iteration.

A primary goal is to expand integration, which includes forging closer ties with Hugging Face models, the OpenAI Assistants API, and other significant AI platforms. Additionally, the framework is moving toward declarative workflows, which will make agent definition and deployment even easier and more user-friendly.

The Evolution of the LangGraph Platform

With infrastructure designed especially for lengthy, stateful workflows, LangGraph Platform is at the forefront of agent deployment. The platform carefully tackles specific agent challenges, such as strong support for asynchronous agent-human collaboration, effective bursty traffic handling for schedule-driven workflows, and robust execution for tasks that could take hours or even days to finish.

With more than 30 endpoints and an API-first methodology, the platform enables developers to create unique user experiences that fit almost any interaction pattern. Everything from conventional chat interfaces to extremely intricate, multi-step business process automation is supported by this inherent flexibility.

Thriving Community and Broad Adoption

With almost 400 businesses already using the platform in production, the LangGraph community is expanding quickly. The commercial LangGraph Platform offers enterprise-grade capabilities for scaling complex AI solutions, while the framework's open-source status (MIT license) guarantees widespread accessibility.

Getting started with LangGraph is straightforward:

Bash:

pip install langgraph

The official GitHub repository at https://github.com/langchain-ai/langgraph provides comprehensive documentation, practical tutorials, and illuminating example implementations. The vibrant community actively contributes examples, tools, and integrations that continually extend the framework's capabilities.

Comprehensive Educational Resources

LangChain Academy offers "Introduction to LangGraph," a free, comprehensive course consisting of 54 lessons and more than 6 hours of video content. Resources like this make LangGraph accessible to developers of all skill levels, covering everything from basic concepts to sophisticated multi-agent system design.

Conclusion: Orchestrating Intelligence, Unleashing Potential

With its move from basic chatbots to complex, stateful, multi-agent cognitive architectures, LangGraph represents a significant paradigm shift in the creation of AI applications. The framework's graph-based methodology offers the inherent flexibility, modularity, and visual clarity necessary to develop enterprise-grade AI systems that can effectively manage the complexity of the real world.

LangGraph's production readiness is clearly demonstrated by the quantified impact across major enterprises: Uber dramatically speeds up development cycles, AppFolio saves property managers over 10 hours per week, and Klarna's AI assistant efficiently completes the work equivalent of 700 full-time employees. These observable, practical implementations attest to LangGraph's unmatched capacity to produce substantial business value at scale.

With its comprehensive state management, sophisticated debugging tools, smooth multi-agent coordination, and reliable production-ready deployment infrastructure, LangGraph enables developers to overcome the drawbacks of conventional LLM chains. A comprehensive, integrated ecosystem for developing, testing, and implementing truly intelligent agents is produced by the framework's integration with LangGraph Studio for visual development and LangSmith for observability.

As the AI landscape continues to evolve toward increasingly complex agentic systems, LangGraph provides a foundational framework for building the next generation of intelligent applications. Its strong architecture, demonstrated enterprise adoption, and quickly growing community make it more than a tool for today's AI applications; it is positioned to influence the development of intelligent systems for years to come.

Modular, stateful, and cooperative agent systems are the way of the future for AI, and LangGraph offers the necessary framework to make this vision a reality. LangGraph provides the most complete, production-ready solution on the market today for developers ready to go beyond simple chatbots and produce genuinely intelligent, significant applications.